Introduction to Agentic AI & Frameworks

Artificial Intelligence is evolving beyond simple question-answering systems into autonomous agents capable of complex reasoning, tool usage, and multi-step problem solving. This shift toward “agentic AI” represents a fundamental change in how we build AI applications. In this multi-part blog series, I’ll guide you through building your first AI agent with modern frameworks like Strands and LangGraph, deploying it to the web with Amazon Bedrock AgentCore, and extending its capabilities with RAG knowledge bases and custom MCP tools. Strap in and join me!

TL;DR: This series teaches you to build AI agents using Strands and LangGraph, deploy them to Amazon Bedrock AgentCore, and extend them with RAG and MCP tools. Post 1 covers the fundamentals and the framework landscape. Code examples are on GitHub.

What is Agentic AI?

Large Language Models (LLMs): The Foundation

Before diving into agents, let’s briefly cover the foundation: Large Language Models (LLMs). These are AI models trained on vast amounts of text data that can understand and generate human-like text. Examples include:

- OpenAI’s GPT models (GPT-5, GPT-4o, GPT-4o-mini)

- Anthropic’s Claude models (via Anthropic API or Amazon Bedrock)

- Meta’s Llama models (run locally on your device via Ollama)

- Google’s Gemini models

- Many others from providers like Mistral, Cohere, etc.

LLMs are powerful for answering questions and generating text, but on their own they have limitations: they can’t access real-time data, perform calculations reliably, or take actions in the real world. This is where agentic AI comes in.

From LLMs to Agents

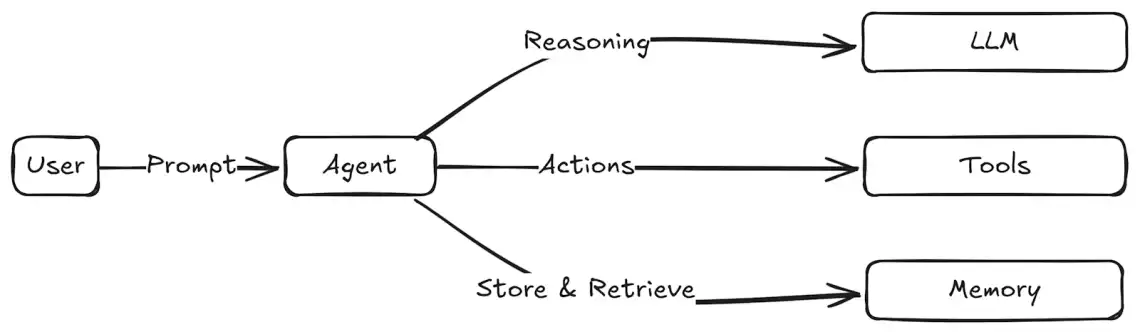

Agentic AI refers to AI systems that can autonomously plan, reason, and take actions to achieve goals. Unlike traditional chatbots that simply respond to prompts, agents can:

- Break down complex tasks into manageable steps

- Use external data, tools and APIs to gather information or perform actions

- Maintain context and memory across interactions

- Make decisions based on reasoning and available information

- Iterate and adapt their approach based on feedback

Think of an agent as an AI assistant that doesn’t just answer questions but can actually help you accomplish tasks by orchestrating multiple steps and tools.

For example, ask whether tomorrow’s outdoor meeting should go ahead: instead of hallucinating an answer or telling you that it cannot assist, an agent can check a weather API, analyze your calendar, and suggest whether you should reschedule.

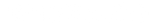

Core Components of AI Agents

The Agent Loop

At the heart of every agent is the agent loop - a cycle of reasoning, action, and observation:

- Reasoning: The agent analyzes the current state and decides what to do next

- Action: The agent executes a tool or generates a response

- Observation: The agent processes the results and updates its understanding

- Repeat: The cycle continues until the goal is achieved

This loop enables agents to handle complex, multi-step workflows that would be impossible with a single call to a Large Language Model (LLM).
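To make the loop concrete, here is a minimal sketch in plain Python. It is not how Strands or LangGraph implement it: `stub_model`, `fake_weather`, and `run_agent` are hypothetical names I made up for illustration, and the "model" is a hard-coded function standing in for a real LLM call.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Step:
    """One decision from the (stubbed) model: call a tool or give a final answer."""
    tool: Optional[str] = None
    tool_input: str = ""
    answer: str = ""


def fake_weather(city: str) -> str:
    # Hypothetical tool: a real agent would call a weather API here.
    return f"Rain expected in {city} tomorrow"


TOOLS = {"weather": fake_weather}


def stub_model(goal: str, observations: list) -> Step:
    """Stand-in for an LLM call: decide the next step from the goal and observations."""
    if not observations:
        # Nothing observed yet, so ask for the weather first.
        return Step(tool="weather", tool_input="Cape Town")
    return Step(answer=f"{observations[-1]}, so consider moving the meeting indoors.")


def run_agent(goal: str, max_steps: int = 5) -> str:
    observations = []
    for _ in range(max_steps):
        step = stub_model(goal, observations)       # Reasoning
        if step.tool is None:
            return step.answer                      # Goal achieved, stop looping
        result = TOOLS[step.tool](step.tool_input)  # Action
        observations.append(result)                 # Observation
    return "Stopped: step limit reached"


print(run_agent("Should I reschedule tomorrow's outdoor meeting?"))
```

The `max_steps` cap is a design choice worth keeping in any real loop: it stops an agent that never reaches a final answer from running (and billing you) forever.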

Tools and Function Calling

Tools extend an agent’s capabilities beyond text generation. An agent can use tools to:

- Query databases or APIs

- Perform calculations

- Search the web

- Execute code

- Interact with external systems

Modern LLMs support function calling, allowing them to intelligently select and invoke the right tools based on the task at hand. We’ll explore building agents with tools in the next post of this series.
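As a rough preview, function calling boils down to this: the model emits a structured request naming a tool and its arguments, and your code looks the tool up and runs it. The sketch below uses only the standard library. The JSON shape, the `TOOLS` registry, and the `dispatch` helper are assumptions for illustration, not the wire format of any particular provider.

```python
import json


def add(a: float, b: float) -> float:
    return a + b


def multiply(a: float, b: float) -> float:
    return a * b


# Hypothetical registry: tool name -> callable plus the metadata a model would see.
TOOLS = {
    "add": {"fn": add, "description": "Add two numbers", "parameters": ["a", "b"]},
    "multiply": {"fn": multiply, "description": "Multiply two numbers", "parameters": ["a", "b"]},
}


def dispatch(tool_call_json: str) -> str:
    """Run the tool named in a model-produced call and return a JSON result string."""
    call = json.loads(tool_call_json)
    spec = TOOLS.get(call["name"])
    if spec is None:
        return json.dumps({"error": f"unknown tool: {call['name']}"})
    args = call.get("arguments", {})
    missing = [p for p in spec["parameters"] if p not in args]
    if missing:
        return json.dumps({"error": f"missing arguments: {missing}"})
    return json.dumps({"result": spec["fn"](**args)})


# Pretend the model chose the multiply tool for "What is 12 times 7?"
model_output = '{"name": "multiply", "arguments": {"a": 12, "b": 7}}'
print(dispatch(model_output))  # {"result": 84}
```

Returning errors as data rather than raising lets the agent read the failure as an observation and try again with corrected arguments.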

The Agentic Framework Ecosystem

Building agents from scratch is complex. Fortunately, a rich ecosystem of frameworks has emerged to abstract away the boilerplate and provide battle-tested patterns. While this series focuses on Strands and LangGraph, it’s worth knowing about some of the options available to you:

Popular Agentic Frameworks

Strands (AWS) - A production-ready framework with minimal boilerplate, native Bedrock integration, and built-in deployment utilities for AWS. Emphasizes simplicity and rapid development.

LangGraph (LangChain) - Graph-based agent workflows with fine-grained control over state transitions and decision flows. Part of the extensive LangChain ecosystem with broad community support.

CrewAI - A role-playing multi-agent framework where agents work together like a crew, each with specific roles and responsibilities. Ideal for collaborative task execution.

AutoGen (Microsoft) - Enables building multi-agent conversations with customizable and conversable agents. Strong focus on agent-to-agent communication patterns.

Semantic Kernel (Microsoft) - An SDK that integrates LLMs with conventional programming languages, emphasizing enterprise integration and plugin architecture.

LlamaIndex - Originally focused on data indexing and retrieval, now includes powerful agentic capabilities with data agents that can reason over structured and unstructured data.

Swarm (OpenAI) - A lightweight experimental framework for multi-agent orchestration, emphasizing simplicity and handoffs between agents.

There are too many frameworks to list them all (Pydantic AI, Smolagents, …), and each has its strengths. The right choice depends on your specific use case, existing tech stack, and architectural preferences. For this series, we’ll focus on Strands and LangGraph because both deploy to Amazon Bedrock AgentCore and offer production-ready features.

Strands vs LangGraph: Our Focus Frameworks

Strands

Strands is a Python framework designed for building production-ready AI agents with minimal code. Key features include:

- Simple, intuitive SDK for defining agents and tools

- Built-in support for streaming responses

- Native integration with Amazon Bedrock models

- Support for multiple model providers (Bedrock, OpenAI, Ollama, Anthropic, Cohere, and more)

- Multi-agent patterns (orchestration, swarm, workflow)

- Deployment utilities for AWS services

Strands is ideal if you want to quickly build and deploy agents without getting bogged down in implementation details. This was my first framework and I still enjoy its simplicity.

Try it yourself: Run the example code to see real agents in action with both Strands and LangGraph.

```python
# Simple Strands agent with Ollama
from strands import Agent
from strands.models.ollama import OllamaModel

# Point the model at a local Ollama server
model = OllamaModel(host="http://localhost:11434", model_id="llama3.2")
agent = Agent(model=model)

response = agent("Tell me about Cape Town")
print(response)
```

LangGraph

LangGraph, part of the LangChain ecosystem, provides a graph-based approach to building agents:

- Define agent workflows as directed graphs

- Fine-grained control over agent behavior and state transitions

- Support for complex, branching logic

- Support for multiple model providers (OpenAI, Bedrock, Ollama, Anthropic, Google, and 100+ others)

- Extensive ecosystem of integrations

LangGraph offers more flexibility and control, making it suitable for complex, custom agent architectures.

LangChain vs LangGraph: LangChain is a framework for building LLM applications with chains and components. LangGraph extends this with stateful, graph-based workflows specifically designed for agentic systems. Think of LangChain as the foundation and LangGraph as the specialized tool for building agents with complex decision flows.

```python
# Simple LangGraph agent with Ollama
from langchain.agents import create_agent
from langchain_ollama import ChatOllama

# Point the chat model at a local Ollama server
model = ChatOllama(model="llama3.2", base_url="http://localhost:11434")
agent = create_agent(model, tools=[])

response = agent.invoke({"messages": [("user", "Tell me about Cape Town")]})
print(response["messages"][-1].content)
```
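If “graph thinking” sounds abstract, here is a sketch of the idea in plain Python, deliberately without the LangGraph library. Nodes are functions that read the shared state and return updates, and edges decide which node runs next. Every name here (`NODES`, `EDGES`, `run_graph`, and the three node functions) is hypothetical and exists only to illustrate the pattern; LangGraph’s real API differs.

```python
END = "__end__"  # Sentinel marking the end of the workflow


def classify(state: dict) -> dict:
    # Toy heuristic: questions containing digits go to the calculator node.
    needs_math = any(ch.isdigit() for ch in state["question"])
    return {"route": "calculate" if needs_math else "respond"}


def calculate(state: dict) -> dict:
    # Hypothetical tool node: sums the whole numbers found in the question.
    tokens = state["question"].replace("?", "").split()
    return {"result": sum(int(tok) for tok in tokens if tok.isdigit())}


def respond(state: dict) -> dict:
    if "result" in state:
        return {"answer": f"The total is {state['result']}"}
    return {"answer": "No calculation needed"}


NODES = {"classify": classify, "calculate": calculate, "respond": respond}

# Each edge is a function of the state, so routing can be fixed or conditional.
EDGES = {
    "classify": lambda state: state["route"],  # conditional edge
    "calculate": lambda state: "respond",      # fixed edge
    "respond": lambda state: END,
}


def run_graph(question: str, entry: str = "classify") -> dict:
    state = {"question": question}
    node = entry
    while node != END:
        state.update(NODES[node](state))  # run the node, merge its updates
        node = EDGES[node](state)         # follow the edge to the next node
    return state


print(run_graph("What is 2 plus 40?")["answer"])  # The total is 42
```

The point of the explicit graph is that every possible path is visible in `EDGES`, which is what gives this style its fine-grained control over state transitions.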

Quick Comparison

| Feature | Strands | LangGraph |
|---|---|---|
| Learning Curve | Easiest - simple API, model-first approach | Steeper - requires graph thinking |
| Architecture | Model-first: describe goals, agent reasons | Graph-based: explicit nodes and edges |
| AWS Integration | Native Bedrock integration, AWS-optimized | Works with AWS but not native |
| Tool Integration | Built-in MCP support, massive tool library | LangChain ecosystem integration |
| Multi-Agent | Orchestration, swarm, workflow patterns | Graph-based coordination |
| Production Ready | AWS-backed, used internally at Amazon | Battle-tested, widely deployed |
| Deployment | Local, AgentCore, self-hosted | Local, AgentCore, LangGraph Cloud, self-hosted |
| Code Style | Minimal boilerplate, declarative | More explicit control, imperative |
| Best For | Rapid development, portable deployments | Complex workflows, fine-grained control |

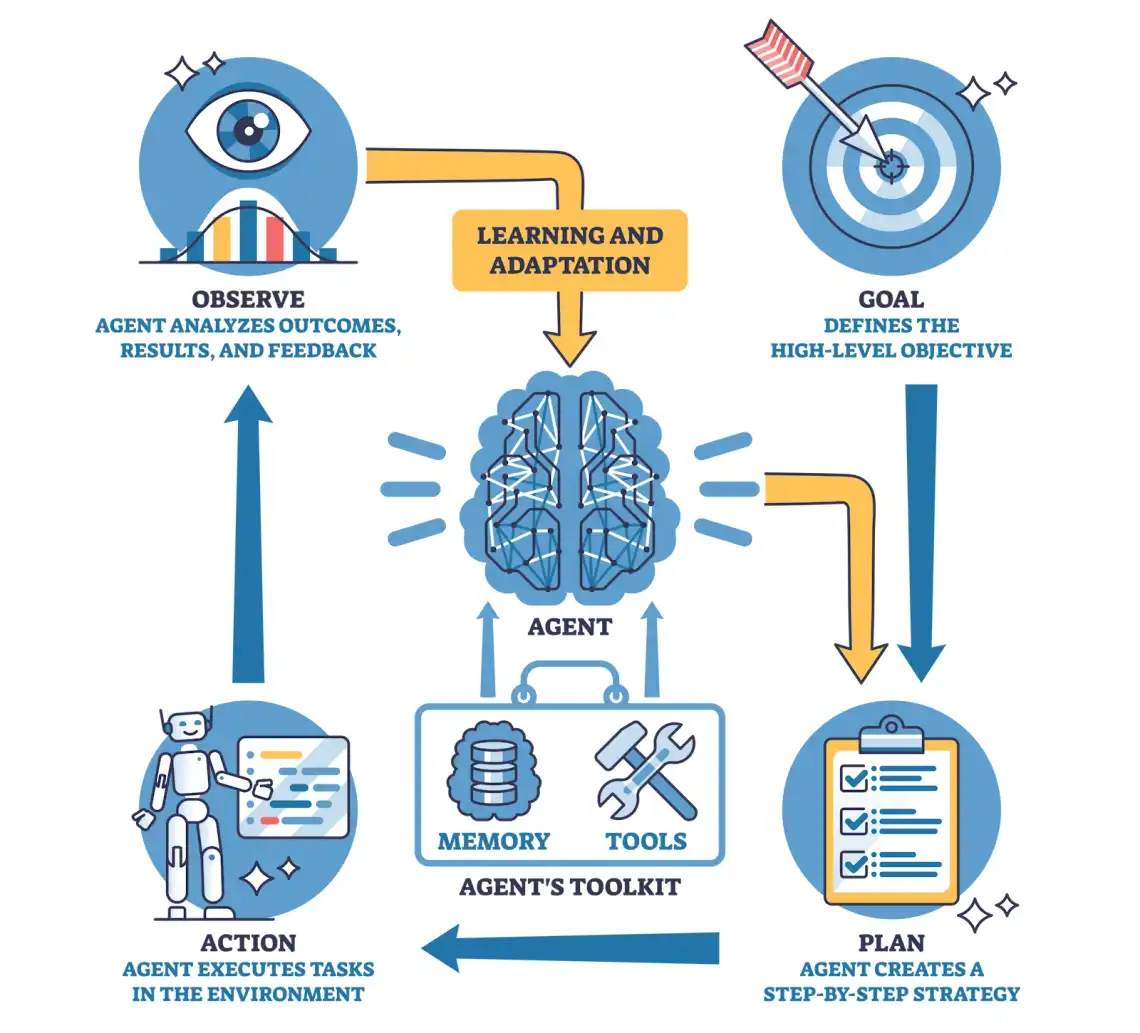

Amazon Bedrock AgentCore

Once you’ve built an agent, you need somewhere to run it. You can run agents anywhere, but serverless (💙) platforms like Amazon Bedrock AgentCore eliminate infrastructure management:

- Serverless runtime: Deploy agents without managing infrastructure

- Built-in memory: Persistent conversation and semantic memory

- Tool connectivity: Integrate with AWS services and custom APIs

- Security: IAM-based access control and VPC support

- Observability: Built-in logging, metrics, and tracing

AgentCore bridges the gap between development and production, providing enterprise-grade infrastructure for agentic applications.

Extending Agents with RAG and Emerging Protocols

Retrieval-Augmented Generation (RAG)

RAG enhances agents with domain-specific knowledge by:

- Storing documents in vector databases (knowledge bases)

- Retrieving relevant context based on user queries

- Augmenting LLM prompts with retrieved information

This allows agents to answer questions about your specific data without fine-tuning a model, which is costly and compute-intensive. Retrieval is also no longer limited to matching exact strings, as in the early days of search engines: agents can run semantic searches over your knowledge base, which work best when paired with a good embeddings model. More on that later in this series!
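Here is a small sketch of the store, retrieve, and augment flow using only the standard library. Big caveat: the `embed` function below is a toy bag-of-words counter that I am using as a stand-in for a real embeddings model, so it matches on shared words rather than meaning. The documents, `retrieve`, and `build_prompt` are likewise made up for illustration.

```python
import math
from collections import Counter

# Hypothetical knowledge base
DOCUMENTS = [
    "Refunds are processed within 5 business days.",
    "Our office in Cape Town is open from 9am to 5pm.",
    "Support tickets are answered within 24 hours.",
]


def embed(text: str) -> Counter:
    # Toy stand-in for an embeddings model: word counts instead of dense vectors.
    return Counter(word.strip(".,?!").lower() for word in text.split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[term] * b[term] for term in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# "Storing": index every document alongside its vector.
INDEX = [(doc, embed(doc)) for doc in DOCUMENTS]


def retrieve(query: str, k: int = 1) -> list:
    # "Retrieving": rank documents by similarity to the query and keep the top k.
    query_vec = embed(query)
    ranked = sorted(INDEX, key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]


def build_prompt(query: str) -> str:
    # "Augmenting": prepend the retrieved context to the question sent to the LLM.
    context = "\n".join(retrieve(query))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


print(build_prompt("When is the Cape Town office open?"))
```

Swap `embed` for a real embeddings model and `INDEX` for a vector database and the shape of the flow stays the same; that swap is what turns word overlap into semantic search.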

Model Context Protocol (MCP)

Backstory: It was May 2025 and my LinkedIn feed was flooded with this new “MCP-thing”. It piqued my interest, and a few vibe-coding sessions later I was hooked on agentic AI!

MCP has become a widely adopted standard for connecting AI agents to external tools and data sources. With MCP, you can:

- Define tools through MCP servers

- Let agents discover and use tools dynamically

- Share tools across different agent implementations

- Add context to agents and provide prompt templates (used less often, since most people associate MCP with tools)

FastMCP makes it easy to build custom MCP servers in Python, enabling you to expose any API or functionality as an agent tool.
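Under the hood, MCP messages are JSON-RPC 2.0, and tool discovery and invocation use the `tools/list` and `tools/call` methods. The sketch below imitates just those two requests in-process so you can see the shape of the exchange. It is a simplification and not a working MCP server: it skips the initialization handshake and transports entirely, and `get_time_zone` and `handle` are hypothetical names with hard-coded data.

```python
import json


def get_time_zone(city: str) -> str:
    # Hypothetical tool with hard-coded data for illustration.
    zones = {"Cape Town": "Africa/Johannesburg"}
    return zones.get(city, "unknown")


TOOLS = {
    "get_time_zone": {
        "handler": get_time_zone,
        "description": "Look up the IANA time zone for a city",
        "inputSchema": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    }
}


def handle(request: dict) -> dict:
    """Answer a single JSON-RPC request the way a tool server might."""
    method = request["method"]
    if method == "tools/list":
        # Discovery: describe each tool so an agent can decide when to use it.
        tools = [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]
        result = {"tools": tools}
    elif method == "tools/call":
        # Invocation: run the named tool with the supplied arguments.
        params = request["params"]
        text = TOOLS[params["name"]]["handler"](**params["arguments"])
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        error = {"code": -32601, "message": "Method not found"}
        return {"jsonrpc": "2.0", "id": request["id"], "error": error}
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


listing = handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
call = handle({
    "jsonrpc": "2.0",
    "id": 2,
    "method": "tools/call",
    "params": {"name": "get_time_zone", "arguments": {"city": "Cape Town"}},
})
print(json.dumps(listing, indent=2))
print(json.dumps(call, indent=2))
```

Because the agent learns about tools from the `tools/list` response rather than from hard-coded definitions, the same server can be shared across different agent implementations.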

Agent-to-Agent Protocol (A2A)

As multi-agent systems become more common, standardized communication between agents is crucial. The Agent-to-Agent (A2A) protocol enables:

- Seamless communication between agents across different platforms

- Standardized message formats for agent collaboration

- Interoperability between frameworks (Strands, LangGraph, etc.)

We’ll explore A2A in depth when we cover multi-agent architectures later in this series.

What’s Next in This Series

This is the first post in a series on building agentic AI applications. I’ll be publishing new posts weekly, covering topics including:

- Building your first agent with Strands

- Building your first agent with LangGraph

- Deploying agents to Amazon Bedrock AgentCore

- Adding RAG knowledge bases to your agents

- Building custom MCP tools with FastMCP

- Multi-agent architectures and orchestration (including A2A protocol)

- …and more as the series evolves!

Each post will include practical code examples that you can follow along with. All code will be available in a GitHub repository.

Getting Started

To follow along with this series, you’ll need:

- Python 3.10 or later

- Basic familiarity with Python and APIs

- A code editor or IDE

Choose your model provider (pick one or more):

- Ollama (free, runs locally on your laptop)

- Amazon Bedrock (cloud, requires AWS account with model access)

- OpenAI (cloud, requires API key and credits)

In the next post, we’ll dive into building your first agent with Strands, starting with a simple conversational agent and progressively adding tools and capabilities.

Why Learn Agentic AI?

Agentic AI is rapidly becoming the standard for building sophisticated AI applications. Whether you’re building customer support systems, data analysis tools, or automation workflows, understanding how to build and deploy agents is an essential skill for modern developers.

The frameworks and platforms covered in this series represent the cutting edge of AI development, and mastering them will position you to build the next generation of intelligent applications.
